In my last post, “Robots, NASA, and The Future of Space Exploration,” I discussed the NASA-wide Robotics Field Test that I participated in this summer. My project, which I worked on with my colleague DW Wheeler, was to demonstrate planning and control of the ATHLETE robot walking over rocky terrain using the “FootFall Planning” software we have been developing for the last four years.

I’m interested in robotics because the effort of designing machines that can handle challenges similar to the ones we face every day teaches us about our own lives and experiences. One of these challenges is the simple task of walking. If we decompose the act of walking, what are the fundamental problems that must be solved? A four-meter-tall, six-legged robot with wheels on the ends of its legs and electric motors instead of muscles is very different from a flesh-and-bone human. Yet despite these differences, digging into the problem of walking exposes underlying aspects that are independent of metal or flesh, and of whether there are two legs, four, or even six.

Of the many insights that came from developing the FootFall Planner, I am fascinated by the connection between walking and self-awareness. To share this connection, I will first describe the ATHLETE robot and then give an overview of how walking is commanded.

About ATHLETE

ATHLETE is an awe-inspiring robot to work with, and I consider myself privileged to have collaborated with the team at NASA’s Jet Propulsion Laboratory (JPL) in California that built it. Because it is designed to help construct a lunar base and to carry large pieces of infrastructure from the lander over long distances across complex lunar terrain, ATHLETE is large and impressive. The current second-generation prototype is half the anticipated lunar scale. It stands just over four meters tall at its maximum height and has a payload capacity of 450 kg in Earth gravity. Each of its six legs has seven joints and ends in a wheel, which lets the robot drive over smooth terrain and walk over rough terrain. This dual style of movement is ideal for robots operating in unstructured natural environments, because the most appropriate mode of locomotion can be selected to suit the conditions. Driving is fast and energy-efficient but limited to smooth terrain, while walking is slower and less efficient but more robust to obstacles and challenging terrain.
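The post does not say how ATHLETE chooses between driving and walking, so the following is only a minimal sketch of the kind of rule such a choice could use, assuming a local height grid of the terrain ahead. The function names and both thresholds (`MAX_DRIVE_STEP`, `MAX_DRIVE_ROUGHNESS`) are hypothetical values picked for illustration, not ATHLETE parameters.

```python
from statistics import pstdev
from typing import Sequence

# Hypothetical thresholds for illustration only (metres), not ATHLETE's real limits.
MAX_DRIVE_STEP = 0.10       # largest height jump between neighbouring cells the wheels can roll over
MAX_DRIVE_ROUGHNESS = 0.05  # largest height standard deviation still treated as "smooth"


def max_step(heights: Sequence[Sequence[float]]) -> float:
    """Largest height difference between 4-connected neighbouring grid cells."""
    rows, cols = len(heights), len(heights[0])
    worst = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                worst = max(worst, abs(heights[r + 1][c] - heights[r][c]))
            if c + 1 < cols:
                worst = max(worst, abs(heights[r][c + 1] - heights[r][c]))
    return worst


def choose_mode(heights: Sequence[Sequence[float]]) -> str:
    """Drive only when the patch is both free of large steps and low in overall roughness."""
    flat = [h for row in heights for h in row]
    if max_step(heights) <= MAX_DRIVE_STEP and pstdev(flat) <= MAX_DRIVE_ROUGHNESS:
        return "drive"
    return "walk"
```

For example, `choose_mode([[0.0, 0.01], [0.02, 0.01]])` returns `"drive"`, while a patch containing a 0.4 m rock edge, `[[0.0, 0.4], [0.0, 0.0]]`, returns `"walk"`. Checking both a local step and a global spread is one simple way to capture “smooth” terrain; a real system would also weigh slope, soil and energy budget.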

How ATHLETE Walks

In general, walking is composed of many tasks, including taking a single purposeful step, generating rhythmic gaits, using sensory feedback to feel the force of contact with the ground, and balancing dynamically even as the ground shifts underfoot (as on sand or soft dirt). In the FootFall Planner we worked on taking purposeful steps and on generating gait sequences for the six legs. In this post I will focus on the process of taking a single intentional step. The process starts by using ATHLETE’s cameras to build a model of the surrounding terrain, then projects the robot’s current pose into the terrain model, and finally plans a sequence of motions and trajectories for each leg joint such that the robot avoids hitting itself or any part of the terrain.
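The “project the current pose into the terrain model” step can be sketched as a frame transform followed by a height-map lookup. This is a minimal illustration under stated assumptions, not FootFall code: the `Pose`, `HeightMap` and `foot_clearances` names are hypothetical, the pose keeps only yaw (roll and pitch are ignored for brevity), and the terrain is assumed to be a regular grid of heights.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    """Hypothetical body pose in the terrain frame; roll and pitch ignored to keep the sketch short."""
    x: float
    y: float
    z: float
    yaw: float  # heading about the vertical axis, radians


def body_to_world(pose: Pose, p: Vec3) -> Vec3:
    """Rotate a body-frame point by the pose's yaw, then translate it by the pose position."""
    bx, by, bz = p
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * bx - s * by, pose.y + s * bx + c * by, pose.z + bz)


@dataclass
class HeightMap:
    """Regular grid of terrain heights; heights[row][col] covers one square cell."""
    heights: List[List[float]]
    cell_size: float
    origin_x: float = 0.0
    origin_y: float = 0.0

    def height_at(self, x: float, y: float) -> Optional[float]:
        col = math.floor((x - self.origin_x) / self.cell_size)
        row = math.floor((y - self.origin_y) / self.cell_size)
        if 0 <= row < len(self.heights) and 0 <= col < len(self.heights[0]):
            return self.heights[row][col]
        return None  # outside the modelled terrain


def foot_clearances(pose: Pose, feet_body: Sequence[Vec3], terrain: HeightMap) -> List[Optional[float]]:
    """Height of each foot above the terrain cell beneath it (negative means the foot penetrates it)."""
    out: List[Optional[float]] = []
    for p in feet_body:
        wx, wy, wz = body_to_world(pose, p)
        ground = terrain.height_at(wx, wy)
        out.append(None if ground is None else wz - ground)
    return out
```

Once feet and legs are expressed in the same frame as the terrain, the planner can ask geometric questions (clearance, contact, collision) directly against the terrain model.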

View of the Apollo 15 landing site showing Hadley Rille, Hadley C crater, and the Apennine Mountains. This 3D view was created from original Apollo orbital images using the NASA Ames Stereo Pipeline.

Most of the cameras on ATHLETE come in pairs, like a pair of eyes. After images are requested and taken, each returned stereo pair is processed to build a 3D model of the terrain. We do this with an open-source piece of software called the Ames Stereo Pipeline, which is part of the NASA Vision Workbench. These tools were built in our research group, the Intelligent Robotics Group, and are designed to provide flexible and extensible support for advanced computer vision algorithms. Our group uses them for tasks as diverse as building 3D models of the Moon and Mars from satellite data and robotic navigation, where local 3D terrain models let the robot plan a safe route around rocks and other hazards.
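At the heart of turning a stereo pair into 3D is triangulation: for a rectified pair, a pixel’s disparity d (its horizontal shift between the left and right images) gives depth Z = f·B/d, where f is the focal length in pixels and B the distance between the two cameras. The sketch below shows only that last step, assuming a rectified pair, square pixels and an already-computed disparity map; the function name and parameter values are hypothetical, and the Ames Stereo Pipeline itself does much more (image correlation, sub-pixel refinement, full camera models).

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def disparity_to_points(
    disparity: Sequence[Sequence[Optional[float]]],
    focal_px: float,
    baseline_m: float,
    cx: float,
    cy: float,
) -> List[Point3]:
    """Triangulate rectified stereo disparities (pixels) into camera-frame 3D points (metres)."""
    points: List[Point3] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d is None or d <= 0:
                continue  # no stereo match, or a point at infinity
            z = focal_px * baseline_m / d  # depth is inversely proportional to disparity
            x = (u - cx) * z / focal_px    # back-project the pixel through a pinhole camera
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points
```

With `focal_px=500.0` and `baseline_m=0.4`, a 10-pixel disparity maps to a depth of 20 m and a 20-pixel disparity to 10 m. Because depth varies with 1/d, a one-pixel matching error costs far more accuracy on distant terrain than on the ground right next to the robot.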

In the FootFall Planning System, once ATHLETE has taken images and the local 3D terrain model is built, the operator can select a new location on the ground to move a leg to. This starts the motion planning process, which takes the terrain model, the current configuration of the robot, and the desired foot placement, and searches through a seven-dimensional space of possible leg positions until it finds an efficient motion plan that avoids any collisions with the robot itself or with the terrain. The search space is seven-dimensional because the leg has seven joints, each of which can be controlled through its own range of motion. As each candidate leg position is evaluated, a collision-checking algorithm determines whether the robot would be in collision with itself or the terrain in that position. Once the motion planner finds a sequence of collision-free motions that achieves the desired foot placement, it returns a command sequence to the operator, who can inspect the planned motions and preview the results on a virtual model of the robot. If the command sequence looks safe, it can be sent to the robot for actual execution.
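The post does not name the search algorithm (the Smith and Chavez-Clemente paper listed below compares several), so the sketch below swaps in a generic, widely used sampling-based planner, a Rapidly-exploring Random Tree (RRT), to show what “searching a seven-dimensional joint space with a collision checker” can look like. All names and parameters here (`rrt_plan`, `in_collision`, `step`, `goal_bias`, the joint limits) are hypothetical; `in_collision` is a stand-in callback for the real robot-and-terrain collision checker.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Config = Tuple[float, ...]  # one joint angle (radians) per joint, e.g. 7 for an ATHLETE leg


def _lerp(a: Config, b: Config, t: float) -> Config:
    return tuple(x + t * (y - x) for x, y in zip(a, b))


def _edge_free(a: Config, b: Config, in_collision: Callable[[Config], bool], resolution: float = 0.05) -> bool:
    """Check evenly spaced configurations along the straight joint-space segment a -> b."""
    n = max(1, math.ceil(math.dist(a, b) / resolution))
    return not any(in_collision(_lerp(a, b, i / n)) for i in range(n + 1))


def rrt_plan(start: Sequence[float], goal: Sequence[float], limits: Sequence[Tuple[float, float]],
             in_collision: Callable[[Config], bool], step: float = 0.3, goal_bias: float = 0.1,
             max_iters: int = 5000, seed: int = 0) -> Optional[List[Config]]:
    """Return a collision-free list of joint configurations from start to goal, or None."""
    start, goal = tuple(start), tuple(goal)
    if in_collision(start) or in_collision(goal):
        return None  # e.g. the leg already appears to be "inside" the terrain model
    rng = random.Random(seed)
    nodes: List[Config] = [start]
    parent: List[Optional[int]] = [None]
    for _ in range(max_iters):
        # Sample a random configuration within joint limits, occasionally the goal itself.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = tuple(rng.uniform(lo, hi) for lo, hi in limits)
        near_i = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_i]
        d = math.dist(near, sample)
        new = sample if d <= step else _lerp(near, sample, step / d)  # extend by at most `step`
        if not _edge_free(near, new, in_collision):
            continue
        nodes.append(new)
        parent.append(near_i)
        if math.dist(new, goal) <= step and _edge_free(new, goal, in_collision):
            if new != goal:
                nodes.append(goal)
                parent.append(len(nodes) - 2)
            path, i = [], len(nodes) - 1
            while i is not None:  # walk back up the tree to the start
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

A call such as `rrt_plan((0.0,) * 7, (0.5,) * 7, [(-math.pi, math.pi)] * 7, in_collision)` searches all seven joints at once. Note the early `None` when the start configuration is already in collision: a planner built this way fails immediately whenever the terrain model wrongly contains the robot’s own leg.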

Self Awareness

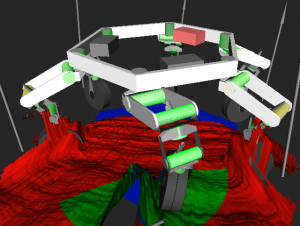

Self-imaging: stereo reconstruction will include the legs as part of the terrain. In this image the front leg has been moved aside to highlight the impact it had on the terrain model. Green regions of the terrain are reachable by the leg.

This algorithmic process suddenly becomes philosophically interesting when the robot can see its own legs and feet in its camera images. A naive implementation of 3D terrain modeling treats everything visible in the camera image as part of the terrain. Thus, if part of the leg and foot is visible, it ends up in the terrain model, and the motion planner treats it as an obstacle that the robot must not move through. But since that is exactly where the leg currently is, the motion planner complains that the robot is already in collision and cannot move safely in any direction. This confusion arises because the robot knows where its own body is in space but does not recognize its own body in the images of the surrounding terrain.

The solution is to implement algorithms that identify the robot’s limbs in the images and remove them before building the terrain map used by the motion planner. In other words, the robot must be able to visually distinguish between Self and Other in its field of view. This can be done by projecting the robot’s knowledge of its own leg position into the camera image and using that location as the starting point for a process that decides which pixels in the image belong to the leg and which do not. Once all the pixels that belong to the robot are removed from the image, the remaining terrain can be processed and motions planned safely.
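The “project, then decide which pixels are the leg” process can be sketched with a pinhole projection to get seed pixels, plus simple region growing over a depth image. This is an illustrative assumption, not the method the post describes in detail: the function names are hypothetical, and the growth criterion (neighbouring pixels whose depth differs by less than `tol`) is just one plausible choice. Where the foot touches the ground the depth is continuous, so a pure depth criterion can leak into the terrain; a practical system would also bound the growth, for example to the projected silhouette of the leg model.

```python
from collections import deque
from typing import Deque, Iterable, List, Optional, Set, Tuple

DepthImage = List[List[Optional[float]]]  # None marks pixels with no stereo depth
Pixel = Tuple[int, int]                    # (row, col)


def project(point: Tuple[float, float, float], fx: float, fy: float, cx: float, cy: float) -> Pixel:
    """Pinhole projection of a camera-frame point (x right, y down, z forward) to (row, col)."""
    x, y, z = point
    return int(round(fy * y / z + cy)), int(round(fx * x / z + cx))


def grow_self_mask(depth: DepthImage, seeds: Iterable[Pixel], tol: float) -> Set[Pixel]:
    """Flood-fill outward from the seed pixels across neighbours whose depth changes by less than tol."""
    rows, cols = len(depth), len(depth[0])
    mask: Set[Pixel] = set()
    queue: Deque[Pixel] = deque()
    for r, c in seeds:
        if 0 <= r < rows and 0 <= c < cols and depth[r][c] is not None and (r, c) not in mask:
            mask.add((r, c))
            queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in mask
                    and depth[nr][nc] is not None
                    and abs(depth[nr][nc] - depth[r][c]) < tol):
                mask.add((nr, nc))
                queue.append((nr, nc))
    return mask


def remove_self(depth: DepthImage, mask: Set[Pixel]) -> DepthImage:
    """Copy of the depth image with the robot's own pixels blanked out before terrain modelling."""
    return [[None if (r, c) in mask else d for c, d in enumerate(row)]
            for r, row in enumerate(depth)]
```

In use, points sampled along the leg’s kinematic model (already expressed in the camera frame) are passed through `project` to get seeds, `grow_self_mask` labels the “Self” pixels, and only the output of `remove_self` goes on to terrain reconstruction.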

Unlike ATHLETE, we humans do not necessarily place our feet with such careful attention when we walk: we usually do not look directly at our feet, and instead rely heavily on rhythmic gaits and our ability to balance dynamically. But when we use our hands to manipulate the world, we often take a similarly purposeful approach of looking, modeling, and reasoning about our motions. In fact, looking at the six-legged ATHLETE, one can think of its walking as much like a large hand grasping the ground and walking by manipulating the ground with its six fingers.

Origins of Self-Consciousness?

The philosophically exciting aspect of this work is that it shows how the basic computational requirements of moving purposefully through the world include the ability to differentiate between Self and Other. We appear to make this distinction for many different tasks: walking, manipulating objects, and even distinguishing our own voice from all the other sounds we hear. I have even read papers indicating that when we feel pressure on our skin from touching another object, we experience the same contact force differently depending on whether it is generated externally or by our own motion. So, in every sensory modality we have, we seem to find the same need to separate Self from Other in order to make use of the data.

And what would happen if we could not separate Self from Other? In her TED talk, Jill Taylor, a neuroanatomist, describes her experience of having a stroke and how it gave her profound insight into the nature of Self and Other. About eight minutes into the talk, she discusses the moment when she lost her ability to differentiate her own arm from the surrounding scene. As she describes it, the experience is beautiful (she loses the sense of her own body and identity and feels completely connected to everything), but those moments also leave her completely incapacitated. During waves of normal thought she regains her sense of Self and is able to perform tasks, such as saving her own life by calling for help.

If the ability to differentiate Self from Other evolved to enable intentional motion and manipulation in the physical world, it seems possible that this capability for modeling one’s Self separately from the environment has been built upon and used in increasingly complex ways over the generations. Increasing this “self-modeling” capability allows us to reason about the potential outcomes of our actions and to become better at predicting how we can influence the surrounding environment. Building on the same principles, a really complex model of ourselves enables us to reason about our own thinking and to optimize basic behaviors. That capacity for self-reflection lets us ask how much attention we should give to our model of Self and how much we should give to our connection with Other. After all, while it is clearly functional to be able to think about our Self separately from the environment, we do not exist in isolation, and our hearts seem to sing the brightest when we experience connection.

Further Technical Reading

If you would like to dig deeper into the FootFall Planning System, we have written a number of papers that describe the algorithms and technologies in much greater detail. The first paper listed below gives a good overview of the system as it was in 2008, and the following papers go into greater detail on specific aspects. A new paper summarizing the entire four-year project has just been submitted to the Journal of Field Robotics and will hopefully be accepted for publication later this year.

Vytas SunSpiral, Daniel Chavez-Clemente, Michael Broxton, Leslie Keely, Patrick Milhelich, “FootFall: A Ground Based Operations Toolset Enabling Walking for the ATHLETE Rover,” In Proceedings of AIAA Space2008, San Diego, California, Sept. 2008.

Dawn Wheeler, Daniel Chavez-Clemente, Vytas SunSpiral, “FootSpring: A Compliance Model for the ATHLETE Family of Robots,” In Proceedings of the 10th International Symposium on Artificial Intelligence, Robotics and Automation in Space (i-SAIRAS 2010), Sapporo, Japan, Aug. 2010.

T. Smith, D. Chavez-Clemente, “A Practical Comparison of Motion Planning Techniques for Robotic Legs in Environments with Obstacles,” In Proceedings of the Third IEEE International Conference on Space Mission Challenges for Information Technology, Pasadena, California, July 2009.

Tristan Smith, Javier Barreiro, David Smith, Vytas SunSpiral, Daniel Chavez, “Athlete’s Feet: Multi-Resolution Planning for a Hexapod Robot,” In Proceedings of the International Conference on Automated Planning and Scheduling (ICAPS), Sydney, Australia, Sept. 2008.

I would like to end with a formal disclaimer: I do not work for NASA (I’m employed by a contracting company, Stinger Ghaffarian Technologies Inc.), and this blog is written as a personal project.


Watching my 10-month-old daughter Violet try to take her first steps, I find that this post really resonates with me. Violet *never* looks at her feet. She happily stands on our bed, in my lap, or on the floor, making countless adjustments to her balance all the while, and still holding on to our hands. I can’t imagine trying to impart even a fraction of this complexity in software.

Needless to say, I really enjoyed this post!

If you watch a fish in an aquarium tank, you will notice that its jaw opens and closes rhythmically and its tail swishes. Even at rest, we breathe about 12 times per minute. The lungs and stomach undergo large changes in pressure during inspiration and expiration, and this must be carried out with minimal energy. Our lungs evolved from those of fish and must be energy-efficient.

My own hypothesis is that the practice of meditation or yoga tries to create awareness of our internal workings so that we can learn (just as we train AI machines) a breathing pattern that minimizes the energy required to support activities at rest, i.e., when we are not in a stressful state.

Yes! Thanks for this great feedback! One of the things I’m interested in thinking about is how we move our arms and legs to precise locations even though our torso is in constant motion with our breath. For example, if you hold your arm out at full length and focus on your breathing for a moment, you will notice that your hand actually moves up and down with your breath, yet you can also “hold it still” in space: a neat trick that current approaches to robotics do not address.